Basic Terms

Stack

The stack is a data structure, more specifically a Last In First Out (LIFO) data structure, which means that the most recent data placed, or pushed, onto the stack is the next item to be removed, or popped, from the stack.
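A minimal sketch of LIFO behavior in Python. The Stack class is a hypothetical, list-backed illustration of the data structure itself, not the machine's call stack. Push adds to the top, and pop removes whatever was pushed most recently.

```python
class Stack:
    """Hypothetical minimal LIFO stack backed by a Python list."""

    def __init__(self):
        self._items = []

    def push(self, item):
        # The newest item goes on top (the end of the list).
        self._items.append(item)

    def pop(self):
        # The most recently pushed item comes off first (LIFO).
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def peek(self):
        # Look at the top item without removing it.
        if not self._items:
            raise IndexError("peek at empty stack")
        return self._items[-1]

    def __len__(self):
        return len(self._items)


s = Stack()
for x in ("a", "b", "c"):
    s.push(x)
print(s.pop(), s.pop(), s.pop())  # c b a
```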

- The stack stores local variables, information about function calls (such as return addresses), and other data needed to restore the stack when a function or procedure returns.

- On most architectures (e.g., x86 and ARM), the stack grows down the address space, toward lower addresses.

High memory address (e.g., 0xFFFF)
|
| <-- Stack starts here (empty stack pointer)
|
| Function A is called
|   Push return address
|   Push local variables
|
| Function B is called
|   Push return address
|   Push local variables
|
V
Low memory address (e.g., 0x0000)

Each time a function is called:

- A stack frame is created (return address, arguments, local variables).

- The new frame is placed at a lower address than the caller's frame.
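To illustrate frames landing at lower addresses, here is a toy Python model rather than real machine behavior. The starting address 0xFFFF matches the diagram, while the CallStack class, the 8-byte return address, and the frame sizes are assumptions chosen for illustration. Real frames also hold saved registers and alignment padding.

```python
class CallStack:
    """Hypothetical toy model of a stack that grows toward lower addresses."""

    RETURN_ADDRESS_SIZE = 8  # assumption: 8-byte return address (64-bit machine)

    def __init__(self, top=0xFFFF):
        self.sp = top        # empty stack: stack pointer at the high end
        self.frames = []     # (function name, stack pointer before the call)

    def call(self, name, locals_size):
        """Push a frame: return address plus space for local variables."""
        self.frames.append((name, self.sp))
        self.sp -= self.RETURN_ADDRESS_SIZE + locals_size
        return self.sp       # lowest address of the new frame

    def ret(self):
        """Pop the most recent frame (LIFO) and restore the stack pointer."""
        name, saved_sp = self.frames.pop()
        self.sp = saved_sp
        return name


stack = CallStack()
a_frame = stack.call("A", locals_size=32)  # function A is called
b_frame = stack.call("B", locals_size=16)  # A calls function B
print(hex(a_frame), hex(b_frame))          # 0xffd7 0xffbf (B sits lower)
stack.ret()                                # B returns
stack.ret()                                # A returns
print(hex(stack.sp))                       # 0xffff (back to an empty stack)
```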

Heap

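The heap is a region of memory used for dynamic allocation. Despite what the name might suggest, it is not a First In First Out (FIFO) structure (nor the "heap" data structure): blocks can be allocated and freed in any order, and an allocator (e.g., malloc/free in C) keeps track of which parts are in use.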

- The heap is used to hold program data, more specifically dynamically allocated variables.

- Allocated at runtime (not at compile time).

- Can grow and shrink as needed (until the system limit is reached).

- On most systems, the heap grows up the address space, toward higher addresses (toward the stack).
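The "allocated and freed in any order" behavior, plus upward growth, can be sketched with a toy first-fit allocator. Everything here (the ToyHeap class, the base address, the size limit) is hypothetical; real allocators such as malloc are far more elaborate and also merge adjacent free blocks, which this sketch skips.

```python
class ToyHeap:
    """Hypothetical first-fit allocator whose top (brk) grows upward."""

    def __init__(self, base=0x1000, limit=0x2000):
        self.brk = base          # current top of the heap; grows upward
        self.limit = limit       # hypothetical "system limit"
        self.free_blocks = []    # (address, size) blocks available for reuse
        self.used = {}           # address -> size of live allocations

    def malloc(self, size):
        # First fit: reuse the first freed block that is large enough.
        for i, (addr, block_size) in enumerate(self.free_blocks):
            if block_size >= size:
                del self.free_blocks[i]
                if block_size > size:
                    # Keep the leftover part of the block on the free list.
                    self.free_blocks.insert(i, (addr + size, block_size - size))
                self.used[addr] = size
                return addr
        # No free block fits: extend the heap toward higher addresses.
        if self.brk + size > self.limit:
            raise MemoryError("toy heap exhausted")
        addr = self.brk
        self.brk += size
        self.used[addr] = size
        return addr

    def free(self, addr):
        # Any live block can be freed at any time; it becomes reusable.
        size = self.used.pop(addr)
        self.free_blocks.append((addr, size))


heap = ToyHeap()
a = heap.malloc(64)
b = heap.malloc(32)
c = heap.malloc(128)
heap.free(b)            # free the middle block first: not FIFO order
d = heap.malloc(16)     # reuses the start of b's old space
print(hex(a), hex(b), hex(c), hex(d))  # 0x1000 0x1040 0x1060 0x1040
```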

High Address
----------------
|    Stack     | <--- Grows Down
----------------
|              | <--- Unused
----------------
|     Heap     | <--- Grows Up
----------------
| Global/Data  |
----------------
| Code (.text) |
----------------
Low Address

Paging

Paging is a memory management scheme that allows the operating system to provide virtual memory, making each process believe it has a large, contiguous block of memory, even though physical memory (RAM) is limited and may be fragmented.

It divides memory into small, fixed-size pages and maps them between:

- Virtual addresses (what programs see)

- Physical addresses (actual RAM locations)

Benefits of paging:

- Isolation – Each process gets its own virtual address space

- Security – Processes can’t access each other’s memory (unless memory is explicitly shared)

- Efficiency – Lets the OS use memory more flexibly (no need for big contiguous blocks)

- Virtual memory – Can use disk space as extra memory (swap)
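Isolation can be sketched with two hypothetical per-process page tables: the same virtual address maps to different physical frames, and an address with no mapping is simply unreachable. The 4 KB page size, the frame numbers, and the SegmentationFault exception are illustrative assumptions.

```python
PAGE_SIZE = 4096  # assumption: 4 KB pages


class SegmentationFault(Exception):
    """Hypothetical stand-in for the OS rejecting an unmapped access."""


# Hypothetical per-process page tables: virtual page number -> physical frame number.
page_tables = {
    "process_1": {0x400: 7},
    "process_2": {0x400: 12},  # same virtual page, different physical frame
}


def translate(process, vaddr):
    """Translate vaddr using only this process's own page table."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    table = page_tables[process]
    if vpn not in table:
        # No mapping: this process has no way to reach that memory.
        raise SegmentationFault(f"{process}: no mapping for {hex(vaddr)}")
    return table[vpn] * PAGE_SIZE + offset


vaddr = 0x400010
print(hex(translate("process_1", vaddr)))  # 0x7010
print(hex(translate("process_2", vaddr)))  # 0xc010
```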

Key Concepts

| Concept | Description |
|---|---|
| Page | A fixed-size block of memory (usually 4 KB) in virtual memory |
| Frame | A fixed-size block of memory (also 4 KB) in physical RAM |
| Page Table | A data structure that maps pages to frames |
| Virtual Address | The address used by a program (logical view) |
| Physical Address | The actual location in RAM (real hardware) |

When a program accesses memory:

- It uses a virtual address (e.g., 0x7ffdeadbeef).

- The MMU (Memory Management Unit) uses the page table to map it to a physical address.

- The CPU reads/writes the actual RAM location.

If the page isn’t in RAM → Page Fault → the OS loads it from disk into a free frame, updates the page table, and the faulting access is retried.
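The translation steps and the page-fault path above can be sketched as a toy Python model. ToyMMU, its single-level page table, and the free-frame list are hypothetical; real MMUs walk multi-level page tables in hardware and cache translations, and a real OS would read the page's contents from disk where this sketch only assigns a frame. Pages are assumed to be 4 KB, so the low 12 bits of an address are the offset within the page.

```python
PAGE_SIZE = 4096   # assumption: 4 KB pages
OFFSET_BITS = 12   # 2**12 == 4096, so the low 12 bits are the page offset


class ToyMMU:
    """Hypothetical single-level page table with demand paging."""

    def __init__(self, free_frames):
        self.page_table = {}             # virtual page number -> physical frame number
        self.free_frames = list(free_frames)
        self.page_faults = 0

    def translate(self, vaddr):
        vpn = vaddr >> OFFSET_BITS       # virtual page number
        offset = vaddr & (PAGE_SIZE - 1) # byte position inside the page
        if vpn not in self.page_table:   # page not present -> page fault
            self._handle_page_fault(vpn)
        return (self.page_table[vpn] << OFFSET_BITS) | offset

    def _handle_page_fault(self, vpn):
        # Stand-in for the OS: take a free frame and map the page to it.
        # A real OS would also copy the page's contents in from disk here.
        self.page_faults += 1
        if not self.free_frames:
            raise MemoryError("no free frame (a real OS would evict a page to swap)")
        self.page_table[vpn] = self.free_frames.pop(0)


mmu = ToyMMU(free_frames=[5, 9, 2])
vaddr = 0x7ffdeadbeef                    # example address from the text
print(hex(vaddr >> OFFSET_BITS), hex(vaddr & (PAGE_SIZE - 1)))  # 0x7ffdeadb 0xeef
print(hex(mmu.translate(vaddr)))         # page fault, mapped to frame 5 -> 0x5eef
print(hex(mmu.translate(vaddr + 1)))     # same page, no new fault -> 0x5ef0
print(mmu.page_faults)                   # 1
```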

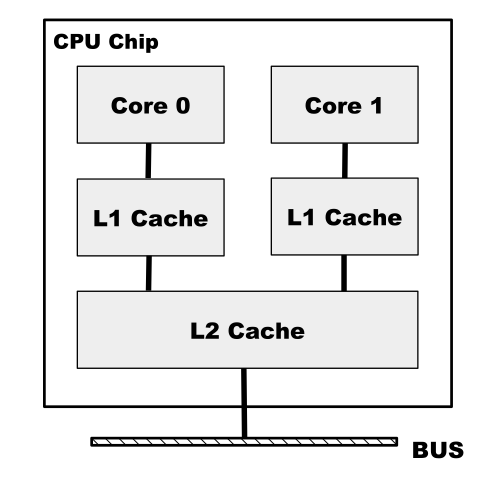

Cache Memory

Cache memory is a small, fast memory inside the CPU used to temporarily store frequently accessed data from RAM. When the CPU accesses a memory location, it stores a copy of the surrounding block (a cache line, typically 64 bytes) in the cache for faster future access.

- Cache hit: Data is found in cache → fast access.

- Cache miss: Data must be fetched from RAM → slower.
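A toy direct-mapped cache makes hit/miss counting concrete: each memory line can live in exactly one cache slot, chosen from its address. The DirectMappedCache class, the 64-byte line size, and the 8-line capacity are assumptions for illustration; real L1 caches are larger and set-associative.

```python
LINE_SIZE = 64  # assumption: 64-byte cache lines
NUM_LINES = 8   # assumption: tiny cache of 8 lines (512 bytes)


class DirectMappedCache:
    """Hypothetical direct-mapped cache that only tracks which line each slot holds."""

    def __init__(self, num_lines=NUM_LINES, line_size=LINE_SIZE):
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines  # which memory line each slot currently holds
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        """Return True on a cache hit, False on a miss (which fills the slot)."""
        line = addr // self.line_size
        index = line % self.num_lines   # the only slot this line may use
        tag = line // self.num_lines    # tells lines sharing a slot apart
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.misses += 1
        self.tags[index] = tag          # "fetch from RAM" and keep a copy
        return False


cache = DirectMappedCache()
for addr in range(256):                 # read 256 consecutive bytes
    cache.access(addr)
print(cache.hits, cache.misses)         # 252 4: one miss per 64-byte line
```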

Modern CPUs have:

- L1 cache per core (smallest and fastest)

- L2 cache, per core on many modern CPUs (shared by a small cluster of cores on some designs)

- Often a larger shared L3 cache

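Ordinary memory accesses go through these cache levels. Copies of the same data can exist at several levels at once (L1, L2, L3, and RAM, plus a register if the program has loaded the value); the hardware's cache-coherence mechanisms keep the cached copies consistent, while register contents are managed by the compiler and program. Using the cache efficiently, for example by accessing memory sequentially, can significantly boost performance.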
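Why access order matters can be sketched with a toy fully associative LRU cache (an assumption; real caches are set-associative and add hardware prefetching). The sketch assumes a 64x64 array of 8-byte elements stored row by row, 64-byte lines, and a 32-line cache. Walking it row by row reuses each fetched line for 8 consecutive elements, while walking it column by column jumps to a different line on every access, and those lines are evicted before they are needed again.

```python
from collections import OrderedDict

LINE_SIZE = 64  # assumption: 64-byte cache lines
ELEM_SIZE = 8   # assumption: 8-byte array elements (e.g., doubles)
N = 64          # N x N array stored row by row (row-major)


def count_misses(addresses, capacity_lines=32):
    """Count misses in a hypothetical fully associative LRU cache."""
    cache = OrderedDict()
    misses = 0
    for addr in addresses:
        line = addr // LINE_SIZE
        if line in cache:
            cache.move_to_end(line)        # mark as most recently used
        else:
            misses += 1
            cache[line] = True
            if len(cache) > capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
    return misses


def address(row, col):
    # Byte address of element [row][col] in a row-major layout starting at 0.
    return (row * N + col) * ELEM_SIZE


row_major = [address(r, c) for r in range(N) for c in range(N)]
col_major = [address(r, c) for c in range(N) for r in range(N)]

print(count_misses(row_major))  # 512: each line is fetched once, then reused
print(count_misses(col_major))  # 4096: every access misses
```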